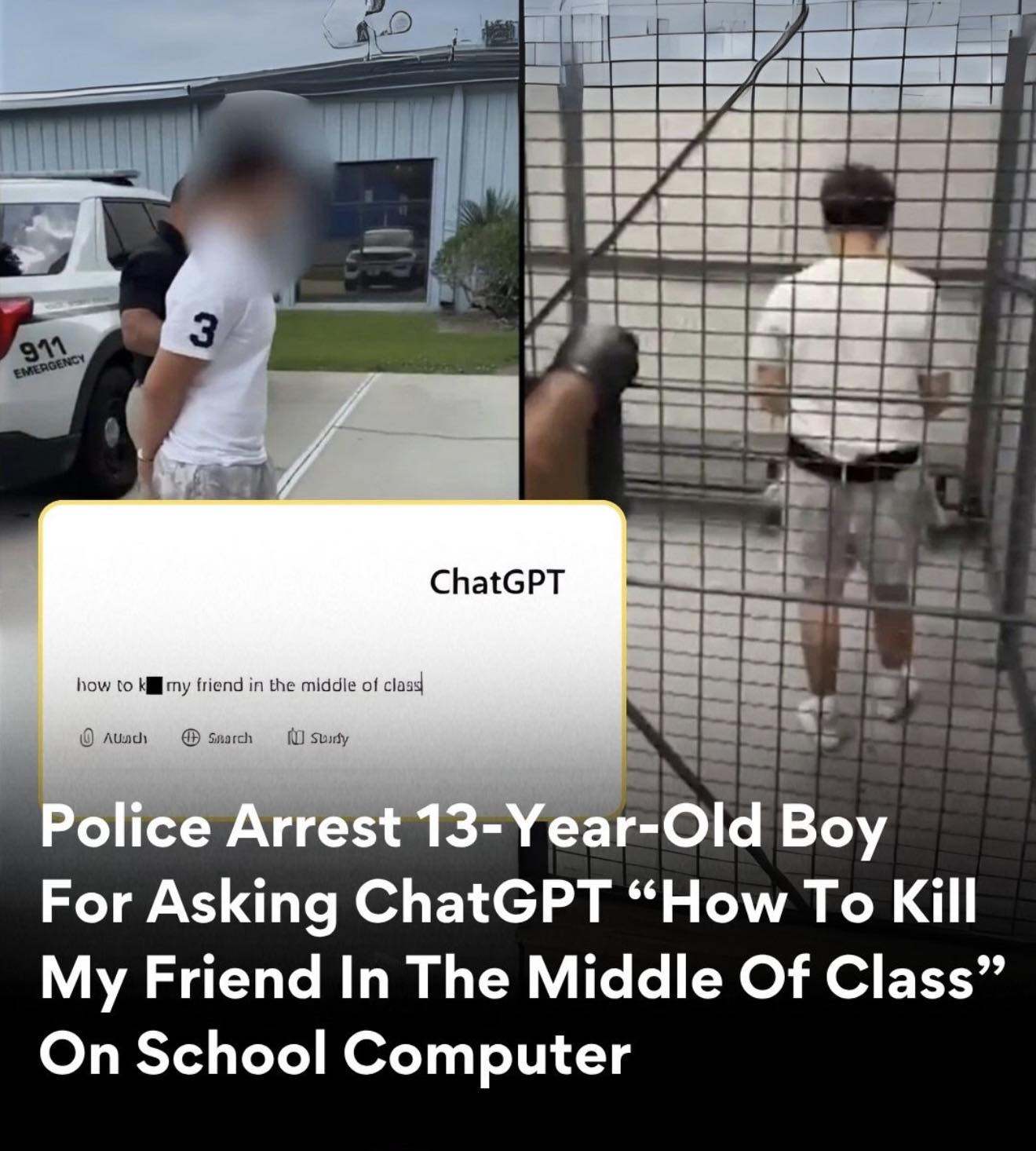

In a shocking incident that highlights the intersection of artificial intelligence and school safety, a 13-year-old student in Florida was arrested after typing the chilling question, “How to kill my friend in the middle of class,” into ChatGPT on a school-issued device. The event has ignited nationwide discussion about the role of AI monitoring tools in schools, their effectiveness, and their potential consequences.

The student’s query was immediately flagged by the AI-powered monitoring system, Gaggle, which is designed to detect potential threats or concerning behavior on school devices. Within moments, a school police officer was notified and arrived to detain the student. While the student later claimed to have been “just trolling” a friend, authorities treated the situation with extreme seriousness, especially given the tragic history of school shootings in the United States.

According to the Volusia County Sheriff’s Office, the student was arrested and booked. Officials stressed that even statements intended as jokes or pranks cannot be taken lightly when they involve threats of violence. The swift response underscores how seriously schools and law enforcement now view potential threats, especially in an era when AI surveillance tools are integrated into educational environments.

The incident has sparked widespread debate about AI monitoring software like Gaggle, which is used in schools across the country to detect harmful or suspicious student behavior. Proponents argue that such systems can prevent tragedies by flagging dangerous situations early, potentially saving lives. In this case, the rapid alert allowed school authorities to intervene before any harm could occur.

Critics, however, are concerned about the implications for student privacy and the potential for overreach. Many argue that Gaggle and similar AI monitoring tools can generate false positives, flagging harmless behavior as threatening. This, they claim, fosters a surveillance-state atmosphere in schools, where students may feel constantly monitored and unable to express themselves freely. Some worry that innocent jokes, exaggerated statements, or casual online discussions could inadvertently lead to arrests or disciplinary action.

Legal experts also weigh in on the matter, emphasizing the delicate balance schools must maintain between safety and student rights. “Schools have a responsibility to protect their students, but they also must ensure that measures like AI surveillance do not criminalize normal adolescent behavior,” says Dr. Karen Michaels, a specialist in education law. “The challenge lies in interpreting context, intent, and the seriousness of online statements, especially when AI tools are involved.”

The Florida case is a stark example of how AI technology is increasingly shaping real-world consequences. As schools integrate digital devices and monitoring systems into classrooms, students’ words and actions are constantly scrutinized by algorithms trained to detect potential threats. While the intention is to enhance safety, the approach raises ethical questions about autonomy, privacy, and the appropriate role of AI in educational settings.

For the student involved, the consequences have been immediate and serious. Arrests at such a young age can have lasting impacts, both legally and psychologically. Supporters of the intervention argue that early action is critical to prevent escalation and ensure the safety of the school community. However, civil liberties advocates caution against treating every AI-generated alert as an imminent threat without human context or investigation.

This incident also underscores the importance of educating students about responsible AI use. Adolescents often experiment online or make provocative statements without fully understanding the implications. As AI tools like ChatGPT become more widely used in schools, students need guidance on how their digital interactions may be monitored and how even “trolling” or joking can have serious repercussions.

Meanwhile, the debate around AI surveillance in schools continues to grow. Supporters cite numerous cases in which early detection prevented violence, arguing that AI monitoring is a valuable addition to traditional safety measures such as counselors, threat assessments, and human oversight. Critics contend that these systems may overstep, surveilling children’s thoughts and private communications, which could lead to anxiety, distrust, or punitive consequences for minor infractions.

In Volusia County, the local community has expressed mixed reactions. Parents, teachers, and students alike are grappling with questions about safety, privacy, and accountability. Social media platforms have lit up with discussion, as citizens debate whether AI tools are protecting children or creating an environment of constant surveillance. Some call for stricter regulations on AI monitoring in schools, while others insist that any measure preventing potential tragedies is worth the trade-off.

Experts also emphasize the need for schools to balance AI detection with human judgment. Technology alone cannot always interpret sarcasm, intent, or context. In this case, the system flagged the message as potentially dangerous, but human review was still required to determine how authorities should respond. The combination of AI and human oversight is crucial to ensuring both safety and fairness.

This Florida incident is part of a broader national conversation about AI, schools, and public safety. As technology evolves, so too do the questions about ethics, privacy, and appropriate responses. Schools across America are exploring ways to leverage AI without creating environments that stifle creativity, expression, or trust among students.

For now, the 13-year-old student remains in custody, and authorities continue to investigate the circumstances surrounding the incident. Meanwhile, the story has captured national attention, illustrating the increasingly complex intersection of youth, technology, and law enforcement. It serves as a cautionary tale for students, parents, and educators alike about the power and consequences of AI surveillance in the digital age.

Ultimately, this case is more than just a headline; it reflects society’s struggle to adapt to emerging technologies while protecting children, schools, and communities. The Florida arrest is a vivid reminder that as AI becomes a part of everyday life, the responsibilities and ethical considerations surrounding its use become ever more critical. The debate over surveillance, safety, and personal freedom is far from over, and this incident will likely serve as a reference point in discussions about AI in education for years to come.